Our pass rate is high to 98.9% and the similarity percentage between our Professional-Machine-Learning-Engineer study guide and real exam is 90% based on our seven-year educating experience. Do you want achievements in the Google Professional-Machine-Learning-Engineer exam in just one try? I am currently studying for the Google Professional-Machine-Learning-Engineer exam. Latest Google Professional-Machine-Learning-Engineer Test exam practice questions and answers, Try Google Professional-Machine-Learning-Engineer Brain Dumps First.

Google Professional-Machine-Learning-Engineer Free Dumps Questions Online, Read and Test Now.

NEW QUESTION 1

You work for a large technology company that wants to modernize their contact center. You have been asked to develop a solution to classify incoming calls by product so that requests can be more quickly routed to the correct support team. You have already transcribed the calls using the Speech-to-Text API. You want to minimize data preprocessing and development time. How should you build the model?

- A. Use the Al Platform Training built-in algorithms to create a custom model

- B. Use AutoML Natural Language to extract custom entities for classification

- C. Use the Cloud Natural Language API to extract custom entities for classification

- D. Build a custom model to identify the product keywords from the transcribed calls, and then run the keywords through a classification algorithm

Answer: A

NEW QUESTION 2

You have deployed multiple versions of an image classification model on Al Platform. You want to monitor the performance of the model versions overtime. How should you perform this comparison?

- A. Compare the loss performance for each model on a held-out dataset.

- B. Compare the loss performance for each model on the validation data

- C. Compare the receiver operating characteristic (ROC) curve for each model using the What-lf Tool

- D. Compare the mean average precision across the models using the Continuous Evaluation feature

Answer: B

NEW QUESTION 3

You are developing models to classify customer support emails. You created models with TensorFlow Estimators using small datasets on your on-premises system, but you now need to train the models using large datasets to ensure high performance. You will port your models to Google Cloud and want to minimize code refactoring and infrastructure overhead for easier migration from on-prem to cloud. What should you do?

- A. Use Al Platform for distributed training

- B. Create a cluster on Dataproc for training

- C. Create a Managed Instance Group with autoscaling

- D. Use Kubeflow Pipelines to train on a Google Kubernetes Engine cluster.

Answer: D

NEW QUESTION 4

You built and manage a production system that is responsible for predicting sales numbers. Model accuracy is crucial, because the production model is required to keep up with market changes. Since being deployed to production, the model hasn't changed; however the accuracy of the model has steadily deteriorated. What issue is most likely causing the steady decline in model accuracy?

- A. Poor data quality

- B. Lack of model retraining

- C. Too few layers in the model for capturing information

- D. Incorrect data split ratio during model training, evaluation, validation, and test

Answer: D

NEW QUESTION 5

You are an ML engineer at a large grocery retailer with stores in multiple regions. You have been asked to create an inventory prediction model. Your models features include region, location, historical demand, and seasonal popularity. You want the algorithm to learn from new inventory data on a daily basis. Which algorithms should you use to build the model?

- A. Classification

- B. Reinforcement Learning

- C. Recurrent Neural Networks (RNN)

- D. Convolutional Neural Networks (CNN)

Answer: B

NEW QUESTION 6

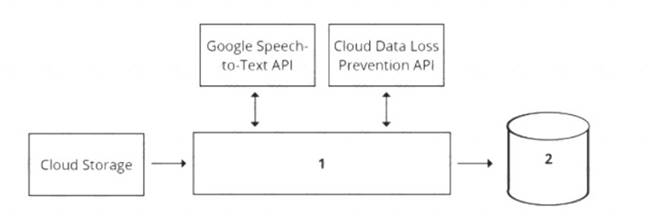

Your organization's call center has asked you to develop a model that analyzes customer sentiments in each call. The call center receives over one million calls daily, and data is stored in Cloud Storage. The data collected must not leave the region in which the call originated, and no Personally Identifiable Information (Pll) can be stored or analyzed. The data science team has a third-party tool for visualization and access which requires a SQL ANSI-2011 compliant interface. You need to select components for data processing and for analytics. How should the data pipeline be designed?

- A. 1 = Dataflow, 2 = BigQuery

- B. 1 = Pub/Sub, 2 = Datastore

- C. 1 = Dataflow, 2 = Cloud SQL

- D. 1 = Cloud Function, 2 = Cloud SQL

Answer: D

NEW QUESTION 7

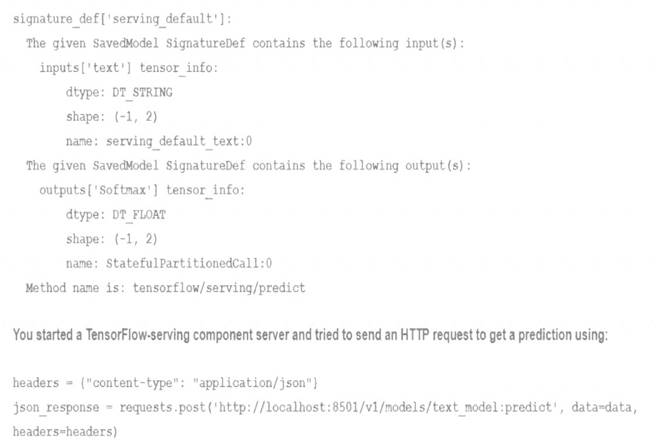

You trained a text classification model. You have the following SignatureDefs:

What is the correct way to write the predict request?

- A. data = json.dumps({"signature_name": "serving_default'\ "instances": [fab', 'be1, 'cd']]})

- B. data = json dumps({"signature_name": "serving_default"! "instances": [['a', 'b', "c", 'd', 'e', 'f']]})

- C. data = json.dumps({"signature_name": "serving_default, "instances": [['a', 'b\ 'c'1, [d\ 'e\ T]]})

- D. data = json dumps({"signature_name": f,serving_default", "instances": [['a', 'b'], [c\ 'd'], ['e\ T]]})

Answer: B

NEW QUESTION 8

You need to build classification workflows over several structured datasets currently stored in BigQuery.

Because you will be performing the classification several times, you want to complete the following steps without writing code: exploratory data analysis, feature selection, model building, training, and hyperparameter tuning and serving. What should you do?

- A. Configure AutoML Tables to perform the classification task

- B. Run a BigQuery ML task to perform logistic regression for the classification

- C. Use Al Platform Notebooks to run the classification model with pandas library

- D. Use Al Platform to run the classification model job configured for hyperparameter tuning

Answer: C

NEW QUESTION 9

You were asked to investigate failures of a production line component based on sensor readings. After receiving the dataset, you discover that less than 1% of the readings are positive examples representing failure incidents. You have tried to train several classification models, but none of them converge. How should you resolve the class imbalance problem?

- A. Use the class distribution to generate 10% positive examples

- B. Use a convolutional neural network with max pooling and softmax activation

- C. Downsample the data with upweighting to create a sample with 10% positive examples

- D. Remove negative examples until the numbers of positive and negative examples are equal

Answer: D

NEW QUESTION 10

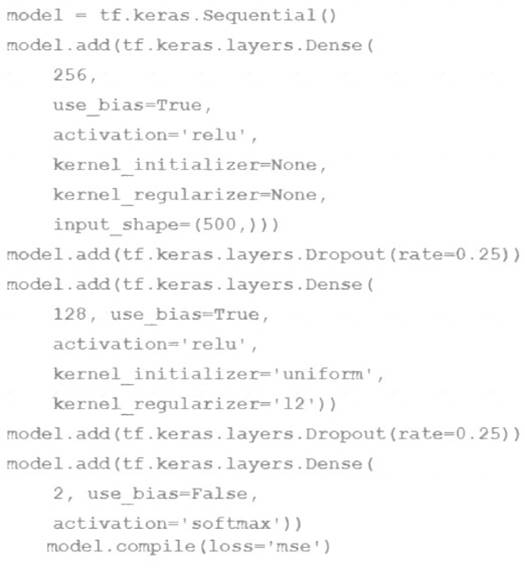

You are going to train a DNN regression model with Keras APIs using this code:

How many trainable weights does your model have? (The arithmetic below is correct.)

- A. 501*256+257*128+2 = 161154

- B. 500*256+256*128+128*2 = 161024

- C. 501*256+257*128+128*2=161408

- D. 500*256*0 25+256*128*0 25+128*2 = 40448

Answer: D

NEW QUESTION 11

Your team has been tasked with creating an ML solution in Google Cloud to classify support requests for one of your platforms. You analyzed the requirements and decided to use TensorFlow to build the classifier so that you have full control of the model's code, serving, and deployment. You will use Kubeflow pipelines for the ML platform. To save time, you want to build on existing resources and use managed services instead of building a completely new model. How should you build the classifier?

- A. Use the Natural Language API to classify support requests

- B. Use AutoML Natural Language to build the support requests classifier

- C. Use an established text classification model on Al Platform to perform transfer learning

- D. Use an established text classification model on Al Platform as-is to classify support requests

Answer: D

NEW QUESTION 12

Your data science team needs to rapidly experiment with various features, model architectures, and hyperparameters. They need to track the accuracy metrics for various experiments and use an API to query the metrics over time. What should they use to track and report their experiments while minimizing manual effort?

- A. Use Kubeflow Pipelines to execute the experiments Export the metrics file, and query the results using the Kubeflow Pipelines API.

- B. Use Al Platform Training to execute the experiments Write the accuracy metrics to BigQuery, and query the results using the BigQueryAPI.

- C. Use Al Platform Training to execute the experiments Write the accuracy metrics to Cloud Monitoring, and query the results using the Monitoring API.

- D. Use Al Platform Notebooks to execute the experiment

- E. Collect the results in a shared Google Sheetsfile, and query the results using the Google Sheets API

Answer: A

NEW QUESTION 13

You manage a team of data scientists who use a cloud-based backend system to submit training jobs. This system has become very difficult to administer, and you want to use a managed service instead. The data scientists you work with use many different frameworks, including Keras, PyTorch, theano. Scikit-team, and custom libraries. What should you do?

- A. Use the Al Platform custom containers feature to receive training jobs using any framework

- B. Configure Kubeflow to run on Google Kubernetes Engine and receive training jobs through TFJob

- C. Create a library of VM images on Compute Engine; and publish these images on a centralized repository

- D. Set up Slurm workload manager to receive jobs that can be scheduled to run on your cloud infrastructure.

Answer: D

NEW QUESTION 14

You are building a model to predict daily temperatures. You split the data randomly and then transformed the training and test datasets. Temperature data for model training is uploaded hourly. During testing, your model performed with 97% accuracy; however, after deploying to production, the model's accuracy dropped to 66%. How can you make your production model more accurate?

- A. Normalize the data for the training, and test datasets as two separate steps.

- B. Split the training and test data based on time rather than a random split to avoid leakage

- C. Add more data to your test set to ensure that you have a fair distribution and sample for testing

- D. Apply data transformations before splitting, and cross-validate to make sure that the transformations are applied to both the training and test sets.

Answer: C

NEW QUESTION 15

You recently designed and built a custom neural network that uses critical dependencies specific to your organization's framework. You need to train the model using a managed training service on Google Cloud. However, the ML framework and related dependencies are not supported by Al Platform Training. Also, both your model and your data are too large to fit in memory on a single machine. Your ML framework of choice uses the scheduler, workers, and servers distribution structure. What should you do?

- A. Use a built-in model available on Al Platform Training

- B. Build your custom container to run jobs on Al Platform Training

- C. Build your custom containers to run distributed training jobs on Al Platform Training

- D. Reconfigure your code to a ML framework with dependencies that are supported by Al Platform Training

Answer: C

NEW QUESTION 16

......

Recommend!! Get the Full Professional-Machine-Learning-Engineer dumps in VCE and PDF From Downloadfreepdf.net, Welcome to Download: https://www.downloadfreepdf.net/Professional-Machine-Learning-Engineer-pdf-download.html (New 60 Q&As Version)