Examcollection DOP-C01 questions are updated, and all DOP-C01 answers are verified by experts. Once you have prepared thoroughly with our DOP-C01 exam prep kits, you will be ready for the real DOP-C01 exam. We have an up-to-the-minute Amazon-Web-Services DOP-C01 dumps study guide.

Check DOP-C01 free dumps before getting the full version:

NEW QUESTION 1

You have just developed a new mobile application that handles analytics workloads on large scale datasets that are stored on Amazon Redshift. Consequently, the application needs to access Amazon Redshift tables. Which of the below methods would be the best, both practically and security-wise, to access the tables? Choose the correct answer from the options below

- A. Create an IAM user and generate encryption keys for that user. Create a policy for Redshift read-only access. Embed the keys in the application.

- B. Create an HSM client certificate in Redshift and authenticate using this certificate.

- C. Create a Redshift read-only access policy in IAM and embed those credentials in the application.

- D. Use roles that allow a web identity federated user to assume a role that allows access to the Redshift table by providing temporary credentials.

Answer: D

Explanation:

For access to any AWS service, the ideal approach for any application is to use roles. This is the first preference. Hence options A and C are wrong.

For more information on IAM policies, please refer to the below link: http://docs.aws.amazon.com/IAM/latest/UserGuide/access_policies.html

Next, for any web or mobile application, you need to use web identity federation. Hence option D is the right option. This, along with the usage of roles, is highly stressed in the AWS documentation.

"When you write such an app, you'll make requests to AWS services that must be signed with an AWS access key. However, we strongly recommend that you do not embed or distribute long-term AWS credentials with apps that a user downloads to a device, even in an encrypted store. Instead, build your app so that it requests temporary AWS security credentials dynamically when needed using web identity federation. The supplied temporary credentials map to an AWS role that has only the permissions needed to perform the tasks required by the mobile app."

For more information on web identity federation, please refer to the below link: http://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_providers_oidc.html
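
As a rough illustration of the role-based approach, the sketch below builds a trust policy that lets a federated user assume a role through `sts:AssumeRoleWithWebIdentity`. It assumes Amazon Cognito as the identity provider, which the question does not specify, and the identity pool ID is a hypothetical placeholder.

```python
import json


def build_web_identity_trust_policy(identity_pool_id: str) -> dict:
    """Trust policy that lets Cognito-federated users assume a role.

    identity_pool_id is a hypothetical Amazon Cognito identity pool ID;
    Cognito itself is an assumption, not something the question names.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": "cognito-identity.amazonaws.com"},
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        "cognito-identity.amazonaws.com:aud": identity_pool_id
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy document that would be attached to the role.
    print(json.dumps(build_web_identity_trust_policy("us-east-1:example-pool-id"), indent=2))
```

The role itself would carry a separate read-only permissions policy for Redshift, so the app never holds long-term keys.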

NEW QUESTION 2

During metric analysis, your team has determined that the company's website during peak hours is experiencing response times higher than anticipated. You currently rely on Auto Scaling to make sure that you are scaling your environment during peak windows. How can you improve your Auto Scaling policy to reduce this high response time? Choose 2 answers.

- A. Push custom metrics to CloudWatch to monitor your CPU and network bandwidth from your servers, which will allow your Auto Scaling policy to have better fine-grain insight.

- B. Increase your Auto Scaling group's number of max servers.

- C. Create a script that runs and monitors your servers; when it detects an anomaly in load, it posts to an Amazon SNS topic that triggers Elastic Load Balancing to add more servers to the load balancer.

- D. Push custom metrics to CloudWatch for your application that include more detailed information about your web application, such as how many requests it is handling and how many are waiting to be processed.

Answer: BD

Explanation:

Option B makes sense because the maximum number of servers may be set too low for the group to handle the peak load.

Option D helps ensure that Auto Scaling scales the group on the right metrics.

For more information on Auto Scaling health checks, please refer to the below AWS documentation link:

http://docs.aws.amazon.com/autoscaling/latest/userguide/healthcheck.html
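
To make option D concrete, here is a minimal sketch of publishing application-level metrics with the CloudWatch `PutMetricData` API through boto3. The namespace and metric names (`MyWebApp`, `RequestsHandled`, `RequestsWaiting`) are hypothetical, and the `publish` helper needs boto3 and AWS credentials, so it is only defined, not run.

```python
from datetime import datetime, timezone


def build_request_metrics(handled: int, waiting: int, group_name: str) -> dict:
    """Build keyword arguments for CloudWatch PutMetricData."""
    now = datetime.now(timezone.utc)
    dimensions = [{"Name": "AutoScalingGroupName", "Value": group_name}]
    return {
        "Namespace": "MyWebApp",  # hypothetical custom namespace
        "MetricData": [
            {"MetricName": "RequestsHandled", "Dimensions": dimensions,
             "Timestamp": now, "Value": handled, "Unit": "Count"},
            {"MetricName": "RequestsWaiting", "Dimensions": dimensions,
             "Timestamp": now, "Value": waiting, "Unit": "Count"},
        ],
    }


def publish(params: dict) -> None:
    """Send the metrics; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder above works without it
    boto3.client("cloudwatch").put_metric_data(**params)
```

A scaling policy could then be driven by an alarm on `RequestsWaiting` rather than on CPU alone.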

NEW QUESTION 3

You run accounting software in the AWS cloud. This software needs to be online continuously during the day every day of the week, and has a very static requirement for compute resources. You also have other, unrelated batch jobs that need to run once per day at any time of your choosing. How should you minimize cost?

- A. Purchase a Heavy Utilization Reserved Instance to run the accounting software. Turn it off after hours. Run the batch jobs with the same instance class, so the Reserved Instance credits are also applied to the batch jobs.

- B. Purchase a Medium Utilization Reserved Instance to run the accounting software. Turn it off after hours. Run the batch jobs with the same instance class, so the Reserved Instance credits are also applied to the batch jobs.

- C. Purchase a Light Utilization Reserved Instance to run the accounting software. Turn it off after hours. Run the batch jobs with the same instance class, so the Reserved Instance credits are also applied to the batch jobs.

- D. Purchase a Full Utilization Reserved Instance to run the accounting software. Turn it off after hours. Run the batch jobs with the same instance class, so the Reserved Instance credits are also applied to the batch jobs.

Answer: A

Explanation:

Reserved Instances provide you with a significant discount compared to On-Demand Instance pricing. Reserved Instances are not physical instances, but rather a billing discount applied to the use of On-Demand Instances in your account. These On-Demand Instances must match certain attributes in order to benefit from the billing discount.

Since the accounting software must be online throughout the day, every day, with a static compute requirement, a Heavy Utilization Reserved Instance, the option designed for steady-state workloads, is the best fit.

For more information, please refer to the below links:

• https://aws.amazon.com/about-aws/whats-new/2011/12/01/New-Amazon-EC2-Reserved-Instances-Options-Now-Available/

• https://aws.amazon.com/blogs/aws/reserved-instance-options-for-amazon-ec2/

• http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-reserved-instances.html

Note: These utilization-based options no longer appear to be available. Standard, Convertible, and Scheduled appear to be the current Reserved Instance options. However, the exam may still refer to the old RIs. https://aws.amazon.com/ec2/pricing/reserved-instances/

NEW QUESTION 4

You have deployed an application to AWS which makes use of Autoscaling to launch new instances. You now want to change the instance type for the new instances. Which of the following is one of the action items to achieve this deployment?

- A. Use Elastic Beanstalk to deploy the new application with the new instance type

- B. Use Cloudformation to deploy the new application with the new instance type

- C. Create a new launch configuration with the new instance type

- D. Create new EC2 instances with the new instance type and attach it to the Autoscaling Group

Answer: C

Explanation:

The ideal way is to create a new launch configuration, attach it to the existing Auto Scaling group, and terminate the running instances.

Option A is invalid because the application already runs in an Auto Scaling group, so redeploying it with Elastic Beanstalk is not the ideal option.

Option B is invalid because this will be a maintenance overhead, since you just have an Auto Scaling group. There is no need to create a whole CloudFormation template for this.

Option D is invalid because the Auto Scaling group will still launch EC2 instances with the older launch configuration.

For more information on Auto Scaling launch configurations, please refer to the below AWS documentation link:

http://docs.aws.amazon.com/autoscaling/latest/userguide/LaunchConfiguration.html
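
The two steps described above (create a new launch configuration, then attach it to the existing group) might look roughly like this with boto3's Auto Scaling client. The group name, configuration name, and AMI ID in the usage are hypothetical, and `RecordingClient` is only a stand-in so the sketch can run without AWS access.

```python
def switch_instance_type(autoscaling, group_name: str, new_config_name: str,
                         image_id: str, instance_type: str) -> None:
    """Create a launch configuration and point the existing group at it.

    `autoscaling` is expected to behave like boto3.client("autoscaling").
    """
    autoscaling.create_launch_configuration(
        LaunchConfigurationName=new_config_name,
        ImageId=image_id,
        InstanceType=instance_type,
    )
    autoscaling.update_auto_scaling_group(
        AutoScalingGroupName=group_name,
        LaunchConfigurationName=new_config_name,
    )


class RecordingClient:
    """Stand-in client that records calls instead of contacting AWS."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, name):
        def record(**kwargs):
            self.calls.append((name, kwargs))
            return {}
        return record
```

Instances that are already running keep the old type until they are terminated and replaced by the group.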

NEW QUESTION 5

You need to monitor specific metrics from your application and send real-time alerts to your Devops Engineer. Which of the below services will fulfil this requirement? Choose two answers

- A. Amazon CloudWatch

- B. Amazon Simple Notification Service

- C. Amazon Simple Queue Service

- D. Amazon Simple Email Service

Answer: AB

Explanation:

Amazon CloudWatch monitors your Amazon Web Services (AWS) resources and the applications you run on AWS in real time. You can use CloudWatch to collect and track metrics, which are variables you can measure for your resources and applications. CloudWatch alarms send notifications or automatically make changes to the resources you are monitoring based on rules that you define.

For more information on Amazon CloudWatch, please refer to the below AWS documentation link:

• http://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/WhatIsCloudWatch.html

Amazon CloudWatch uses Amazon SNS to send email. First, create and subscribe to an SNS topic. When you create a CloudWatch alarm, you can add this SNS topic to send an email notification when the alarm changes state.

For more information on Amazon CloudWatch and SNS, please refer to the below AWS documentation link:

http://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/US_SetupSNS.html
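
The alarm-plus-topic wiring can be sketched as the keyword arguments for the CloudWatch `PutMetricAlarm` API. The alarm name, namespace, metric, and threshold below are hypothetical values chosen for illustration; only the parameter names come from the API.

```python
def build_alarm(topic_arn: str) -> dict:
    """Keyword arguments for CloudWatch PutMetricAlarm."""
    return {
        "AlarmName": "HighRequestsWaiting",   # hypothetical alarm name
        "Namespace": "MyWebApp",              # hypothetical custom namespace
        "MetricName": "RequestsWaiting",      # hypothetical application metric
        "Statistic": "Average",
        "Period": 60,                         # seconds per datapoint
        "EvaluationPeriods": 3,               # breaching periods before ALARM
        "Threshold": 100.0,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [topic_arn],          # SNS topic notified on ALARM
    }


def create_alarm(topic_arn: str) -> None:
    """Create the alarm; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so build_alarm works without it
    boto3.client("cloudwatch").put_metric_alarm(**build_alarm(topic_arn))
```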

NEW QUESTION 6

You currently have EC2 Instances hosting an application. These instances are part of an Auto Scaling group. You now want to change the instance type of the EC2 Instances. How can you manage the deployment with the least amount of downtime?

- A. Terminate the existing Auto Scaling group. Create a new launch configuration with the new instance type. Attach that to the new Auto Scaling group.

- B. Use the AutoScalingRollingUpdate policy on the CloudFormation template's Auto Scaling group.

- C. Use the Rolling Update feature which is available for EC2 Instances.

- D. Manually terminate the instances, launch new instances with the new instance type, and attach them to the Auto Scaling group.

Answer: B

Explanation:

The AWS::AutoScaling::AutoScalingGroup resource supports an UpdatePolicy attribute. This is used to define how an Auto Scaling group resource is updated when an update to the CloudFormation stack occurs. A common approach to updating an Auto Scaling group is to perform a rolling update, which is done by specifying the AutoScalingRollingUpdate policy. This retains the same Auto Scaling group and replaces old instances with new ones, according to the parameters specified.

For more information on Auto Scaling rolling updates, please refer to the below link:

• https://aws.amazon.com/premiumsupport/knowledge-center/auto-scaling-group-rolling-updates/
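
As a small sketch of what the UpdatePolicy attribute looks like, the snippet below builds the Auto Scaling group resource as a Python dictionary and prints it as template JSON. The group sizes, batch size, and pause time are illustrative assumptions, and the launch configuration's logical ID is a hypothetical name.

```python
import json


def rolling_update_group(launch_config_logical_id: str) -> dict:
    """Auto Scaling group resource with an AutoScalingRollingUpdate policy."""
    return {
        "Type": "AWS::AutoScaling::AutoScalingGroup",
        "Properties": {
            "MinSize": "2",
            "MaxSize": "4",
            "AvailabilityZones": {"Fn::GetAZs": ""},
            "LaunchConfigurationName": {"Ref": launch_config_logical_id},
        },
        "UpdatePolicy": {
            "AutoScalingRollingUpdate": {
                "MinInstancesInService": 1,  # keep capacity during the update
                "MaxBatchSize": 1,           # replace one instance at a time
                "PauseTime": "PT5M",         # wait five minutes between batches
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps({"WebServerGroup": rolling_update_group("WebLaunchConfig")}, indent=2))
```

Changing the instance type in the referenced launch configuration and updating the stack would then replace instances in batches while the group stays in place.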

NEW QUESTION 7

Your company releases new features with high frequency while demanding high application availability. As part of the application's A/B testing, logs from each updated Amazon EC2 instance of the application need to be analyzed in near real-time, to ensure that the application is working flawlessly after each deployment. If the logs show any anomalous behavior, then the application version of the instance is changed to a more stable one. Which of the following methods should you use for shipping and analyzing the logs in a highly available manner?

- A. Ship the logs to Amazon S3 for durability and use Amazon EMR to analyze the logs in a batch manner each hour.

- B. Ship the logs to Amazon CloudWatch Logs and use Amazon EMR to analyze the logs in a batch manner each hour.

- C. Ship the logs to an Amazon Kinesis stream and have the consumers analyze the logs in a live manner.

- D. Ship the logs to a large Amazon EC2 instance and analyze the logs in a live manner.

Answer: C

Explanation:

You can use Kinesis Streams for rapid and continuous data intake and aggregation. The type of data used includes IT infrastructure log data, application logs, social media, market data feeds, and web clickstream data. Because the response time for the data intake and processing is in real time, the processing is typically lightweight.

The following are typical scenarios for using Kinesis Streams:

• Accelerated log and data feed intake and processing - You can have producers push data directly into a stream. For example, push system and application logs and they'll be available for processing in seconds. This prevents the log data from being lost if the front end or application server fails. Kinesis Streams provides accelerated data feed intake because you don't batch the data on the servers before you submit it for intake.

• Real-time metrics and reporting - You can use data collected into Kinesis Streams for simple data analysis and reporting in real time. For example, your data-processing application can work on metrics and reporting for system and application logs as the data is streaming in, rather than wait to receive batches of data.

For more information on Amazon Kinesis, please refer to the below link:

• http://docs.aws.amazon.com/streams/latest/dev/introduction.html
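
On the producer side, shipping a log line to a stream comes down to one Kinesis `PutRecord` call per record. The sketch below only builds the request arguments; the stream name and payload shape are hypothetical, and using the instance ID as the partition key is one possible choice, not a requirement.

```python
import json


def build_log_record(stream_name: str, instance_id: str, message: str) -> dict:
    """Keyword arguments for the Kinesis PutRecord API."""
    payload = {"instance_id": instance_id, "message": message}
    return {
        "StreamName": stream_name,
        "Data": json.dumps(payload).encode("utf-8"),
        # Records sharing a partition key go to the same shard, which keeps
        # one instance's log lines in order.
        "PartitionKey": instance_id,
    }


def ship(record: dict) -> None:
    """Send the record; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder works without it
    boto3.client("kinesis").put_record(**record)
```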

NEW QUESTION 8

When thinking of AWS Elastic Beanstalk's model, which is true?

- A. Applications have many deployments, deployments have many environments.

- B. Environments have many applications, applications have many deployments.

- C. Applications have many environments, environments have many deployments.

- D. Deployments have many environments, environments have many applications.

Answer: C

Explanation:

The first step in using Elastic Beanstalk is to create an application, which represents your web application in AWS. In Elastic Beanstalk an application serves as a container for the environments that run your web app, and versions of your web app's source code, saved configurations, logs and other artifacts that you create while using Elastic Beanstalk.

For more information on Applications, please refer to the below link: http://docs.aws.amazon.com/elasticbeanstalk/latest/dg/applications.html

Deploying a new version of your application to an environment is typically a fairly quick process. The new source bundle is deployed to an instance and extracted, and then the web container or application server picks up the new version and restarts if necessary. During deployment, your application might still become unavailable to users for a few seconds. You can prevent this by configuring your environment to use rolling deployments to deploy the new version to instances in batches.

For more information on deployment, please refer to the below link: http://docs.aws.amazon.com/elasticbeanstalk/latest/dg/using-features.deploy-existing-version.html

NEW QUESTION 9

You are creating a CloudFormation template which takes in a database password as a parameter. How can you ensure that the password is not visible when anybody tries to describe the stack?

- A. Use the password attribute for the resource

- B. Use the NoEcho property for the parameter value

- C. Use the hidden property for the parameter value

- D. Set the hidden attribute for the CloudFormation resource.

Answer: B

Explanation:

The AWS documentation mentions:

For sensitive parameter values (such as passwords), set the NoEcho property to true. That way, whenever anyone describes your stack, the parameter value is shown as asterisks (*****).

For more information on CloudFormation parameters, please visit the below URL:

• http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/parameters-section-structure.html
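
For illustration, a parameter declared with NoEcho might look like the following, built here as a Python dictionary and printed as template JSON. The parameter name `DBPassword`, its description, and the minimum length are hypothetical.

```python
import json


def database_password_parameter() -> dict:
    """Parameters section with a masked database password parameter."""
    return {
        "Parameters": {
            "DBPassword": {  # hypothetical parameter name
                "Type": "String",
                "NoEcho": True,  # value is masked when the stack is described
                "MinLength": 8,
                "Description": "Database admin password",
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(database_password_parameter(), indent=2))
```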

NEW QUESTION 10

Your company currently has a set of EC2 Instances sitting behind an Elastic Load Balancer. There is a requirement to create an OpsWorks stack to host the newer version of this application. The idea is to first get the stack in place, carry out a level of testing, and then deploy it at a later stage. The OpsWorks stack and layers have been set up. To complete the testing process, the current ELB is being utilized. But you have now noticed that your current application has stopped responding to requests. Why is this the case?

- A. This is because the OpsWorks stack is utilizing the current instances after the ELB was attached as a layer.

- B. You have configured the OpsWorks stack to deploy new instances in the same domain as the older instances.

- C. The ELB would have deregistered the older instances.

- D. This is because the OpsWorks web layer is utilizing the current instances after the ELB was attached as an additional layer.

Answer: C

Explanation:

The AWS documentation mentions the following:

If you choose to use an existing Elastic Load Balancing load balancer, you should first confirm that it is not being used for other purposes and has no attached instances. After the load balancer is attached to the layer, OpsWorks removes any existing instances and configures the load balancer to handle only the layer's instances. Although it is technically possible to use the Elastic Load Balancing console or API to modify a load balancer's configuration after attaching it to a layer, you should not do so; the changes will not be permanent.

For more information on OpsWorks ELB layers, please visit the below URL:

• http://docs.aws.amazon.com/opsworks/latest/userguide/layers-elb.html

NEW QUESTION 11

You recently encountered a major bug in your web application during a deployment cycle. During this failed deployment, it took the team four hours to roll back to a previously working state, which left customers with a poor user experience. During the post-mortem, your team discussed the need to provide a quicker, more robust way to roll back failed deployments. You currently run your web application on Amazon EC2 and use Elastic Load Balancing for your load balancing needs.

Which technique should you use to solve this problem?

- A. Create deployable versioned bundles of your application. Store the bundle on Amazon S3. Re-deploy your web application on Elastic Beanstalk and enable the Elastic Beanstalk auto-rollback feature tied to CloudWatch metrics that define failure.

- B. Use an AWS OpsWorks stack to re-deploy your web application and use AWS OpsWorks DeploymentCommand to initiate a rollback during failures.

- C. Create deployable versioned bundles of your application. Store the bundle on Amazon S3. Use an AWS OpsWorks stack to redeploy your web application and use AWS OpsWorks application versioning to initiate a rollback during failures.

- D. Using Elastic Beanstalk, redeploy your web application and use the Elastic Beanstalk API to trigger a FailedDeployment API call to initiate a rollback to the previous version.

Answer: B

Explanation:

The AWS documentation mentions the following:

The AWS OpsWorks DeploymentCommand has a rollback option. The following commands are available for apps to use:

deploy: Deploys the app. Ruby on Rails apps have an optional args parameter named migrate. Set Args to {"migrate":["true"]} to migrate the database. The default setting is {"migrate":["false"]}.

rollback: Rolls the app back to the previous version. When you update an app, AWS OpsWorks stores the previous versions, up to a maximum of five. You can use this command to roll an app back as many as four versions.

Reference link:

• http://docs.aws.amazon.com/opsworks/latest/APIReference/API_DeploymentCommand.html
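
A minimal sketch of the two commands as request arguments for the OpsWorks `CreateDeployment` API follows. The stack and app IDs are supplied by the caller and are hypothetical in the usage; the `Comment` text is illustrative.

```python
def build_deploy_request(stack_id: str, app_id: str, migrate: bool = False) -> dict:
    """CreateDeployment arguments for the 'deploy' command."""
    return {
        "StackId": stack_id,
        "AppId": app_id,
        "Command": {
            "Name": "deploy",
            # Args maps a string to a list of strings, as in the quoted docs.
            "Args": {"migrate": ["true" if migrate else "false"]},
        },
    }


def build_rollback_request(stack_id: str, app_id: str) -> dict:
    """CreateDeployment arguments for the 'rollback' command."""
    return {
        "StackId": stack_id,
        "AppId": app_id,
        "Command": {"Name": "rollback"},
        "Comment": "Roll back to the previous app version",
    }


def run(request: dict) -> None:
    """Submit the deployment; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builders work without it
    boto3.client("opsworks").create_deployment(**request)
```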

NEW QUESTION 12

You are writing an AWS Cloud Formation template and you want to assign values to properties that will not be available until runtime. You know that you can use intrinsic functions to do this but are unsure as to which part of the template they can be used in. Which of the following is correct in describing how you can currently use intrinsic functions in an AWS CloudFormation template?

- A. You can use intrinsic functions in any part of a template.

- B. You can only use intrinsic functions in specific parts of a template. You can use intrinsic functions in resource properties, metadata attributes, and update policy attributes.

- C. You can use intrinsic functions only in the resource properties part of a template.

- D. You can use intrinsic functions in any part of a template, except AWSTemplateFormatVersion and Description.

Answer: B

Explanation:

This is clearly given in the AWS documentation, under Intrinsic Function Reference:

AWS CloudFormation provides several built-in functions that help you manage your stacks. Use intrinsic functions in your templates to assign values to properties that are not available until runtime.

Note: You can use intrinsic functions only in specific parts of a template. Currently, you can use intrinsic functions in resource properties, outputs, metadata attributes, and update policy attributes. You can also use intrinsic functions to conditionally create stack resources.

For more information on intrinsic functions, please refer to the below link: https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/intrinsic-function-reference.html
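
To show two of the permitted locations, the sketch below uses `Ref` inside a resource property and `Fn::GetAtt` inside an output. The template is built as a Python dictionary; the AMI ID and logical names are hypothetical.

```python
import json


def sample_template() -> dict:
    """Template using intrinsic functions in resource properties and outputs."""
    return {
        "Parameters": {"KeyName": {"Type": "AWS::EC2::KeyPair::KeyName"}},
        "Resources": {
            "WebServer": {
                "Type": "AWS::EC2::Instance",
                "Properties": {
                    "ImageId": "ami-12345678",      # hypothetical AMI ID
                    "KeyName": {"Ref": "KeyName"},  # resolved at stack creation
                },
            }
        },
        "Outputs": {
            # The DNS name exists only once the instance has been created.
            "PublicDns": {"Value": {"Fn::GetAtt": ["WebServer", "PublicDnsName"]}}
        },
    }


if __name__ == "__main__":
    print(json.dumps(sample_template(), indent=2))
```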

NEW QUESTION 13

Your development team use .Net to code their web application. They want to deploy it to AWS for the purpose of continuous integration and deployment. The application code is hosted in a Git repository. Which of the following combination of steps can be used to fulfil this requirement. Choose 2 answers from the options given below

- A. Use the Elastic beanstalk service to provision an IIS platform web environment to host the application.

- B. Use the Code Pipeline service to provision an IIS environment to host the application.

- C. Create a source bundle for the .Net code and upload it as an application revision.

- D. Use a chef recipe to deploy the code and attach it to the Elastic beanstalk environment.

Answer: AC

Explanation:

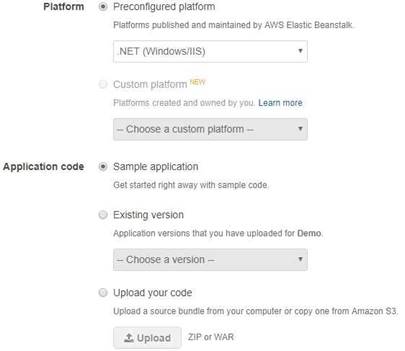

When you provision an environment using the Clastic beanstalk service, you can choose the IIS platform to host the .Net based application as shown below.

You can also upload the application as a zip file and specify it as an application revision.

For more information on Elastic beanstalk and .Net environments, please refer to the below link: http://docs^ws.amazon.com/elasticbeanstalk/latest/dg/create_deploy_NCT.html

NEW QUESTION 14

Which of the below services can be used to deploy application code content stored in Amazon S3 buckets, GitHub repositories, or Bitbucket repositories?

- A. CodeCommit

- B. CodeDeploy

- C. S3 Lifecycles

- D. Route 53

Answer: B

Explanation:

The AWS documentation mentions:

AWS CodeDeploy is a deployment service that automates application deployments to Amazon EC2 instances or on-premises instances in your own facility.

For more information on CodeDeploy, please refer to the below link:

• http://docs.aws.amazon.com/codedeploy/latest/userguide/welcome.html

NEW QUESTION 15

Which of the following CLI commands is used to spin up new EC2 Instances?

- A. aws ec2 run-instances

- B. aws ec2 create-instances

- C. aws ec2 new-instances

- D. aws ec2 launch-instances

Answer: A

Explanation:

The AWS documentation mentions the following:

Launches the specified number of instances using an AMI for which you have permissions. You can specify a number of options, or leave the default options. The following rules apply:

[EC2-VPC] If you don't specify a subnet ID, we choose a default subnet from your default VPC for you. If you don't have a default VPC, you must specify a subnet ID in the request.

[EC2-Classic] If you don't specify an Availability Zone, we choose one for you.

Some instance types must be launched into a VPC. If you do not have a default VPC, or if you do not specify a subnet ID, the request fails. For more information, see Instance Types Available Only in a VPC.

[EC2-VPC] All instances have a network interface with a primary private IPv4 address. If you don't specify this address, we choose one from the IPv4 range of your subnet.

Not all instance types support IPv6 addresses. For more information, see Instance Types.

If you don't specify a security group ID, we use the default security group. For more information, see Security Groups.

If any of the AMIs have a product code attached for which the user has not subscribed, the request fails.

For more information on the EC2 run-instances command, please refer to the below link: http://docs.aws.amazon.com/cli/latest/reference/ec2/run-instances.html
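
As a rough sketch of how the command is put together, the helper below assembles an `aws ec2 run-instances` invocation. The AMI ID, instance type, and subnet ID in the usage are hypothetical; the subnet flag is left out when no subnet is given, in which case the default-VPC rules quoted above apply.

```python
import shlex


def run_instances_command(image_id: str, instance_type: str,
                          count: int = 1, subnet_id=None) -> list:
    """Build the argument list for `aws ec2 run-instances`."""
    args = [
        "aws", "ec2", "run-instances",
        "--image-id", image_id,
        "--instance-type", instance_type,
        "--count", str(count),
    ]
    if subnet_id is not None:
        args += ["--subnet-id", subnet_id]
    return args


if __name__ == "__main__":
    cmd = run_instances_command("ami-12345678", "t3.micro", count=2,
                                subnet_id="subnet-0abc1234")
    # Print a shell-safe version of the command instead of running it.
    print(" ".join(shlex.quote(a) for a in cmd))
```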

NEW QUESTION 16

Which of these is not an intrinsic function in AWS CloudFormation?

- A. Fn::Equals

- B. Fn::If

- C. Fn::Not

- D. Fn::Parse

Answer: D

Explanation:

You can use intrinsic functions, such as Fn::If, Fn::Equals, and Fn::Not, to conditionally create stack resources. These conditions are evaluated based on input parameters that you declare when you create or update a stack. After you define all your conditions, you can associate them with resources or resource properties in the Resources and Outputs sections of a template.

For more information on CloudFormation template functions, please refer to the below URLs:

• http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/intrinsic-function-reference.html and

• http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/intrinsic-function-reference-conditions.html
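
The three condition functions named above can be seen together in a small template, sketched here as a Python dictionary. The parameter `EnvType`, the AMI ID, the instance types, and the volume size are all hypothetical.

```python
import json


def conditional_template() -> dict:
    """Template that sizes and creates resources based on an input parameter."""
    return {
        "Parameters": {
            "EnvType": {"Type": "String", "AllowedValues": ["prod", "test"],
                        "Default": "test"}
        },
        "Conditions": {
            "IsProd": {"Fn::Equals": [{"Ref": "EnvType"}, "prod"]},
            "IsNotProd": {"Fn::Not": [{"Condition": "IsProd"}]},
        },
        "Resources": {
            "WebServer": {
                "Type": "AWS::EC2::Instance",
                "Properties": {
                    "ImageId": "ami-12345678",  # hypothetical AMI ID
                    # Fn::If picks a value per property.
                    "InstanceType": {"Fn::If": ["IsProd", "m5.large", "t3.micro"]},
                },
            },
            "ProdVolume": {
                "Type": "AWS::EC2::Volume",
                "Condition": "IsProd",  # the whole resource is created only in prod
                "Properties": {
                    "Size": 100,
                    "AvailabilityZone": {"Fn::GetAtt": ["WebServer", "AvailabilityZone"]},
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(conditional_template(), indent=2))
```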

NEW QUESTION 17

Your company has an application hosted in AWS which makes use of DynamoDB. There is a requirement from the IT security department to ensure that all source IP addresses which make calls to the DynamoDB tables are recorded. Which of the following services can be used to ensure this requirement is fulfilled?

- A. AWS CodeCommit

- B. AWS CodePipeline

- C. AWS CloudTrail

- D. AWS CloudWatch

Answer: C

Explanation:

The AWS documentation mentions the following:

DynamoDB is integrated with CloudTrail, a service that captures low-level API requests made by or on behalf of DynamoDB in your AWS account and delivers the log files to an Amazon S3 bucket that you specify. CloudTrail captures calls made from the DynamoDB console or from the DynamoDB low-level API. Using the information collected by CloudTrail, you can determine what request was made to DynamoDB, the source IP address from which the request was made, who made the request, when it was made, and so on.

For more information on DynamoDB and CloudTrail, please refer to the below link:

• http://docs.aws.amazon.com/amazondynamodb/latest/developerguide/logging-using-cloudtrail.html
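
Once the log files land in S3, pulling out the source IP addresses is a matter of reading the `sourceIPAddress` field of each record. The sketch below parses a CloudTrail-style log document; `SAMPLE_LOG` is made-up data for illustration, not real output.

```python
import json


def dynamodb_source_ips(log_text: str) -> list:
    """Return (eventName, sourceIPAddress) pairs for DynamoDB events."""
    records = json.loads(log_text).get("Records", [])
    return [
        (r["eventName"], r["sourceIPAddress"])
        for r in records
        if r.get("eventSource") == "dynamodb.amazonaws.com"
    ]


# Made-up sample with only the fields this sketch reads.
SAMPLE_LOG = json.dumps({"Records": [
    {"eventSource": "dynamodb.amazonaws.com", "eventName": "DescribeTable",
     "sourceIPAddress": "192.0.2.10"},
    {"eventSource": "s3.amazonaws.com", "eventName": "ListBuckets",
     "sourceIPAddress": "192.0.2.99"},
]})
```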

NEW QUESTION 18

Your current log analysis application takes more than four hours to generate a report of the top 10 users of your web application. You have been asked to implement a system that can report this information in real time, ensure that the report is always up to date, and handle increases in the number of requests to your web application. Choose the option that is cost-effective and can fulfill the requirements.

- A. Publish your data to CloudWatch Logs, and configure your application to autoscale to handle the load on demand.

- B. Publish your log data to an Amazon S3 bucket. Use AWS CloudFormation to create an Auto Scaling group to scale your post-processing application which is configured to pull down your log files stored in Amazon S3.

- C. Post your log data to an Amazon Kinesis data stream, and subscribe your log-processing application so that it is configured to process your logging data.

- D. Configure an Auto Scaling group to increase the size of your Amazon EMR cluster.

Answer: C

Explanation:

The AWS documentation mentions the below:

Amazon Kinesis makes it easy to collect, process, and analyze real-time, streaming data so you can get timely insights and react quickly to new information. Amazon Kinesis offers key capabilities to cost effectively process streaming data at any scale, along with the flexibility to choose the tools that best suit the requirements of your application. With Amazon Kinesis, you can ingest real-time data such as application logs, website clickstreams, IoT telemetry data, and more into your databases, data lakes and data warehouses, or build your own real-time applications using this data.

Amazon Kinesis enables you to process and analyze data as it arrives and respond in real-time instead of having to wait until all your data is collected before the processing can begin.

For more information on AWS Kinesis please see the below link:

• https://aws.amazon.com/kinesis/
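
On the consumer side, the always-current top-10 report can be kept as a running count that is updated per record. The sketch below assumes each decoded record is a dictionary with a `user` field, which is a hypothetical record shape; fetching records from the stream is left out.

```python
from collections import Counter


class TopUsers:
    """Running request count per user, updated one record at a time."""

    def __init__(self, size: int = 10):
        self.size = size
        self.counts = Counter()

    def process(self, record: dict) -> None:
        # Called for every record the consumer reads from the stream.
        self.counts[record["user"]] += 1

    def report(self) -> list:
        # The most active users so far, highest count first.
        return self.counts.most_common(self.size)
```

Because the counts are updated as each record arrives, the report never waits on a batch job.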

NEW QUESTION 19

Which of the following tools from AWS allows the automatic collection of software inventory from EC2 instances and helps apply OS patches?

- A. AWS CodeDeploy

- B. EC2 Systems Manager

- C. EC2 AMIs

- D. AWS CodePipeline

Answer: B

Explanation:

The Amazon EC2 Systems Manager helps you automatically collect software inventory, apply OS patches, create system images, and configure Windows and Linux operating systems. These capabilities enable automated configuration and ongoing management of systems at scale, and help maintain software compliance for instances running in Amazon EC2 or on-premises.

One feature within Systems Manager is Automation, which can be used to patch, update agents, or bake applications into an Amazon Machine Image (AMI). With Automation, you can avoid the time and effort associated with manual image updates, and instead build AMIs through a streamlined, repeatable, and auditable process.

For more information on EC2 Systems Manager, please refer to the below link:

• https://aws.amazon.com/blogs/aws/streamline-ami-maintenance-and-patching-using-amazon-ec2-systems-manager-automation/

NEW QUESTION 20

As part of your continuous deployment process, your application undergoes an I/O load performance test before it is deployed to production using new AMIs. The application uses one Amazon Elastic Block Store (EBS) PIOPS volume per instance and requires consistent I/O performance. Which of the following must be carried out to ensure that I/O load performance tests yield the correct results in a repeatable manner?

- A. Ensure that the I/O block sizes for the test are randomly selected.

- B. Ensure that the Amazon EBS volumes have been pre-warmed by reading all the blocks before the test.

- C. Ensure that snapshots of the Amazon EBS volumes are created as a backup.

- D. Ensure that the Amazon EBS volume is encrypted.

Answer: B

Explanation:

During the AMI-creation process, Amazon EC2 creates snapshots of your instance's root volume and any other EBS volumes attached to your instance.

New EBS volumes receive their maximum performance the moment that they are available and do not require initialization (formerly known as pre-warming).

However, storage blocks on volumes that were restored from snapshots must be initialized (pulled down from Amazon S3 and written to the volume) before you can access the block. This preliminary action takes time and can cause a significant increase in the latency of an I/O operation the first time each block is accessed. For most applications, amortizing this cost over the lifetime of the volume is acceptable. For a repeatable load test, however, the volumes launched from the new AMI should be initialized first, which is why option B is correct.

Option A is invalid because block sizes are predetermined and should not be randomly selected.

Option C is invalid because this is part of continuous integration, so volumes can be destroyed after the test and snapshots should not be created unnecessarily.

Option D is invalid because encryption is a security feature and not normally part of load tests.

For more information on EBS initialization, please refer to the below link:

• http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ebs-initialize.html
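
Initialization amounts to reading every block of the volume once. The AWS guide does this with tools such as `dd` or `fio`; the Python function below is only an equivalent sketch of the same idea. The device path in the comment is a hypothetical example, and reading a real block device normally needs root privileges.

```python
def initialize_volume(device_path: str, chunk_size: int = 1024 * 1024) -> int:
    """Read every block of the device once and return the bytes read.

    device_path would be something like /dev/xvdf (hypothetical) on an
    instance; any readable file works for trying the function out.
    """
    total = 0
    # buffering=0 gives raw reads, so each call goes to the device itself.
    with open(device_path, "rb", buffering=0) as device:
        while True:
            chunk = device.read(chunk_size)
            if not chunk:  # empty bytes means end of device
                break
            total += len(chunk)
    return total
```

Running this before the I/O test means first-access latency from snapshot-backed blocks does not distort the results.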

NEW QUESTION 21

You have a set of EC2 instances hosted in AWS. You have created a role named DemoRole and attached a policy to it, but you are unable to use that role with an instance. Why is this the case?

- A. You need to create an instance profile and associate it with that specific role.

- B. You are not able to associate an IAM role with an instance.

- C. You won't be able to use that role with an instance unless you also create a user and associate it with that specific role.

- D. You won't be able to use that role with an instance unless you also create a user group and associate it with that specific role.

Answer: A

Explanation:

An instance profile is a container for an IAM role that you can use to pass role information to an EC2 instance when the instance starts.

Option B is invalid because you can associate a role with an instance.

Options C and D are invalid because using users or user groups is not a prerequisite.

For more information on instance profiles, please visit the link:

• http://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_use_switch-role-ec2_instance-profiles.html
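
Creating the instance profile and placing the role in it takes two IAM API calls, sketched below against a boto3-style client. The profile name in the usage is hypothetical, and `RecordingClient` is only a stand-in so the sketch runs without AWS access.

```python
def attach_role_via_instance_profile(iam, role_name: str, profile_name: str) -> None:
    """Create an instance profile and add an existing role to it.

    `iam` is expected to behave like boto3.client("iam").
    """
    iam.create_instance_profile(InstanceProfileName=profile_name)
    iam.add_role_to_instance_profile(
        InstanceProfileName=profile_name,
        RoleName=role_name,
    )


class RecordingClient:
    """Stand-in client that records calls instead of contacting AWS."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, name):
        def record(**kwargs):
            self.calls.append((name, kwargs))
            return {}
        return record
```

The profile, not the role itself, is what gets specified when the instance is launched.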

NEW QUESTION 22

You have a multi-docker environment that you want to deploy to AWS. Which of the following configuration files can be used to deploy a set of Docker containers as an Elastic Beanstalk application?

- A. Dockerrun.aws.json

- B. .ebextensions

- C. Dockerrun.json

- D. Dockerfile

Answer: A

Explanation:

A Dockerrun.aws.json file is an Elastic Beanstalk-specific JSON file that describes how to deploy a set of Docker containers as an Elastic Beanstalk application. You can use a Dockerrun.aws.json file for a multicontainer Docker environment.

Dockerrun.aws.json describes the containers to deploy to each container instance in the environment as well as the data volumes to create on the host instance for the containers to mount.

http://docs.aws.amazon.com/elasticbeanstalk/latest/dg/create_deploy_docker_v2config.html
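To make the file's shape concrete, here is a minimal sketch that generates a version 2 `Dockerrun.aws.json` from Python. The helper `build_dockerrun`, the container names, images, and paths are illustrative assumptions; the top-level keys (`AWSEBDockerrunVersion`, `volumes`, `containerDefinitions`) follow the multicontainer format described in the linked documentation.

```python
import json


def build_dockerrun(containers, volumes=()):
    """Return a dict in the multicontainer (version 2) Dockerrun.aws.json layout."""
    return {
        "AWSEBDockerrunVersion": 2,
        "volumes": list(volumes),                 # data volumes created on the host
        "containerDefinitions": list(containers),  # containers deployed to each instance
    }


dockerrun = build_dockerrun(
    containers=[
        {
            "name": "php-app",   # hypothetical application container
            "image": "php:fpm",
            "essential": True,
            "memory": 128,
            "mountPoints": [
                {"sourceVolume": "php-app", "containerPath": "/var/www/html", "readOnly": True}
            ],
        },
        {
            "name": "nginx-proxy",  # hypothetical reverse proxy in front of the app
            "image": "nginx",
            "essential": True,
            "memory": 128,
            "portMappings": [{"hostPort": 80, "containerPort": 80}],
            "links": ["php-app"],
        },
    ],
    volumes=[{"name": "php-app", "host": {"sourcePath": "/var/app/current/php-app"}}],
)

# Serialized form that would be saved as Dockerrun.aws.json in the source bundle.
dockerrun_json = json.dumps(dockerrun, indent=2)
```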

NEW QUESTION 23

Your application is currently running on Amazon EC2 instances behind a load balancer. Your management has decided to use a Blue/Green deployment strategy. How should you implement this for each deployment?

- A. Set up Amazon Route 53 health checks to fail over from any Amazon EC2 instance that is currently being deployed to.

- B. Using AWS CloudFormation, create a test stack for validating the code, and then deploy the code to each production Amazon EC2 instance.

- C. Create a new load balancer with new Amazon EC2 instances, carry out the deployment, and then switch DNS over to the new load balancer using Amazon Route 53 after testing.

- D. Launch more Amazon EC2 instances to ensure high availability, de-register each Amazon EC2 instance from the load balancer, upgrade it, and test it, and then register it again with the load balancer.

Answer: C

Explanation:

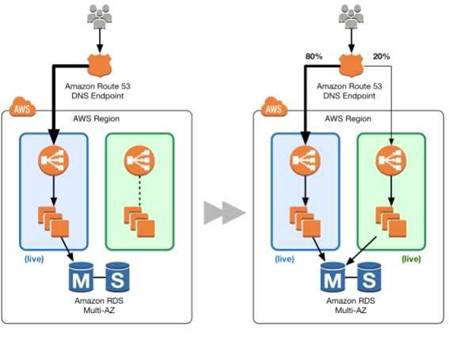

The below diagram shows how this can be done

1) First create a new ELB which will be used to point to the new production changes.

2) Use the Weighted routing policy for Route 53 to distribute the traffic to the 2 ELBs based on an 80-20% traffic scenario. This is the normal case; the percentage can be changed based on the requirement.

3) Finally when all changes have been tested, Route53 can be set to 100% for the new ELB.

Option A is incorrect because this is a failover scenario and cannot be used for Blue/Green deployments. In Blue/Green deployments, you need to have 2 environments running side by side.

Option B is incorrect because you need to have a production stack with the changes, which will run side by side with the existing one.

Option D is incorrect because this is not a Blue/Green deployment scenario. You cannot control which users will go to the new EC2 instances.

For more information on Blue/Green deployments, please refer to the below document from AWS:

https://d0.awsstatic.com/whitepapers/AWS_Blue_Green_Deployments.pdf
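The weighted cut-over in steps 2 and 3 can be sketched as a Route 53 change batch built in Python. The helper name, the record name, and the ELB DNS names are hypothetical; the dictionary layout is the `ChangeBatch` structure accepted by the boto3 Route 53 client's `change_resource_record_sets` call, using plain weighted CNAME records for simplicity (alias records are another option).

```python
def weighted_change_batch(record_name, blue_dns, green_dns, green_weight, total=100):
    """Build a ChangeBatch that splits traffic between the blue and green ELBs.

    green_weight is the share (out of `total`) sent to the new environment.
    """
    if not 0 <= green_weight <= total:
        raise ValueError("green_weight must be between 0 and total")

    def change(set_id, target, weight):
        return {
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": record_name,
                "Type": "CNAME",
                "SetIdentifier": set_id,  # distinguishes the two weighted records
                "Weight": weight,
                "TTL": 60,                # short TTL so weight changes take effect quickly
                "ResourceRecords": [{"Value": target}],
            },
        }

    return {
        "Comment": "blue/green weighted routing",
        "Changes": [
            change("blue", blue_dns, total - green_weight),
            change("green", green_dns, green_weight),
        ],
    }


# Step 2: send 20% of traffic to the new (green) ELB.
batch = weighted_change_batch(
    "app.example.com.", "blue-elb.example.com", "green-elb.example.com", green_weight=20
)
# Step 3: after testing, send 100% of traffic to the green ELB.
final_batch = weighted_change_batch(
    "app.example.com.", "blue-elb.example.com", "green-elb.example.com", green_weight=100
)
```

With real credentials, each batch would be passed as `ChangeBatch=` together with the hosted zone ID to `change_resource_record_sets`.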

NEW QUESTION 24

After a daily scrum with your development teams, you've agreed that using Blue/Green style deployments would benefit the team. Which technique should you use to deliver this new requirement?

- A. Re-deploy your application on AWS Elastic Beanstalk, and take advantage of Elastic Beanstalk deployment types.

- B. Using an AWS CloudFormation template, re-deploy your application behind a load balancer, launch a new AWS CloudFormation stack during each deployment, update your load balancer to send half your traffic to the new stack while you test, after verification update the load balancer to send 100% of traffic to the new stack, and then terminate the old stack.

- C. Create a new Auto Scaling group with the new launch configuration and desired capacity same as that of the initial Auto Scaling group and associate it with the same load balancer. Once the new Auto Scaling group's instances got registered with ELB, modify the desired capacity of the initial Auto Scaling group to zero and gradually delete the old Auto Scaling group.

- D. Using an AWS OpsWorks stack, re-deploy your application behind an Elastic Load Balancing load balancer and take advantage of OpsWorks stack versioning, during deployment create a new version of your application, tell OpsWorks to launch the new version behind your load balancer, and when the new version is launched, terminate the old OpsWorks stack.

Answer: C

Explanation:

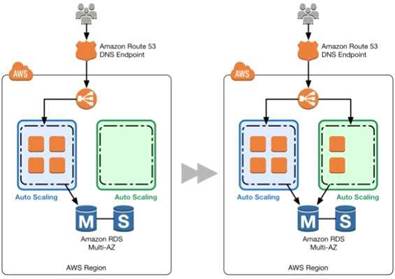

This is given as a practice in the AWS Blue/Green Deployments whitepaper.

A blue group carries the production load while a green group is staged and deployed with the new code. When it's time to deploy, you simply attach the green group to the existing load balancer to introduce traffic to the new environment. For HTTP/HTTPS listeners, the load balancer favors the green Auto Scaling group because it uses a least outstanding requests routing algorithm.

As you scale up the green Auto Scaling group, you can take blue Auto Scaling group instances out of service by either terminating them or putting them in Standby state.

For more information on Blue/Green deployments, please refer to the below document from AWS:

https://d0.awsstatic.com/whitepapers/AWS_Blue_Green_Deployments.pdf
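The Auto Scaling group swap described above can be sketched as two calls. The function and group names are hypothetical, and `autoscaling` is assumed to be a boto3 Auto Scaling client; `attach_load_balancers` applies to Classic Load Balancers, while target groups use a different call. A `MagicMock` replaces the client so the sketch runs offline, and the wait for green instances to become healthy is deliberately left out.

```python
from unittest import mock


def swap_auto_scaling_groups(autoscaling, blue_group, green_group, load_balancer_name):
    """Attach the green group to the existing load balancer, then drain the blue group."""
    # Introduce traffic to the new environment.
    autoscaling.attach_load_balancers(
        AutoScalingGroupName=green_group,
        LoadBalancerNames=[load_balancer_name],
    )
    # In real use, wait here until the green instances are registered and healthy.
    # Scale the old group to zero; MinSize must also drop for DesiredCapacity=0 to be valid.
    autoscaling.update_auto_scaling_group(
        AutoScalingGroupName=blue_group,
        MinSize=0,
        DesiredCapacity=0,
    )


# Dry run against a stub client (no AWS calls are made).
asg_stub = mock.MagicMock()
swap_auto_scaling_groups(asg_stub, "app-blue", "app-green", "app-elb")
```

Deleting the old group afterwards is a separate step, taken once the green environment has proven stable.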

NEW QUESTION 25

When building a multicontainer Docker platform using Elastic Beanstalk, which of the following is required?

- A. Dockerfile to create custom images during deployment

- B. Prebuilt Images stored in a public or private online image repository.

- C. Kubernetes to manage the Docker containers.

- D. Red Hat OpenShift to manage the Docker containers.

Answer: B

Explanation:

This is a special note given in the AWS documentation for the Multicontainer Docker platform for Elastic Beanstalk:

Building custom images during deployment with a Dockerfile is not supported by the multicontainer Docker platform on Elastic Beanstalk. Build your images and deploy them to an online repository before creating an Elastic Beanstalk environment.

For more information on Multicontainer Docker platform for Elastic Beanstalk, please refer to the below link:

http://docs.aws.amazon.com/elasticbeanstalk/latest/dg/create_deploy_docker_ecs.html

NEW QUESTION 26

......
